Hybrid Programming

Now that I have MPI (Message Passing Interface) running on my cluster, it’s time to do some hybrid programming with OpenMP.

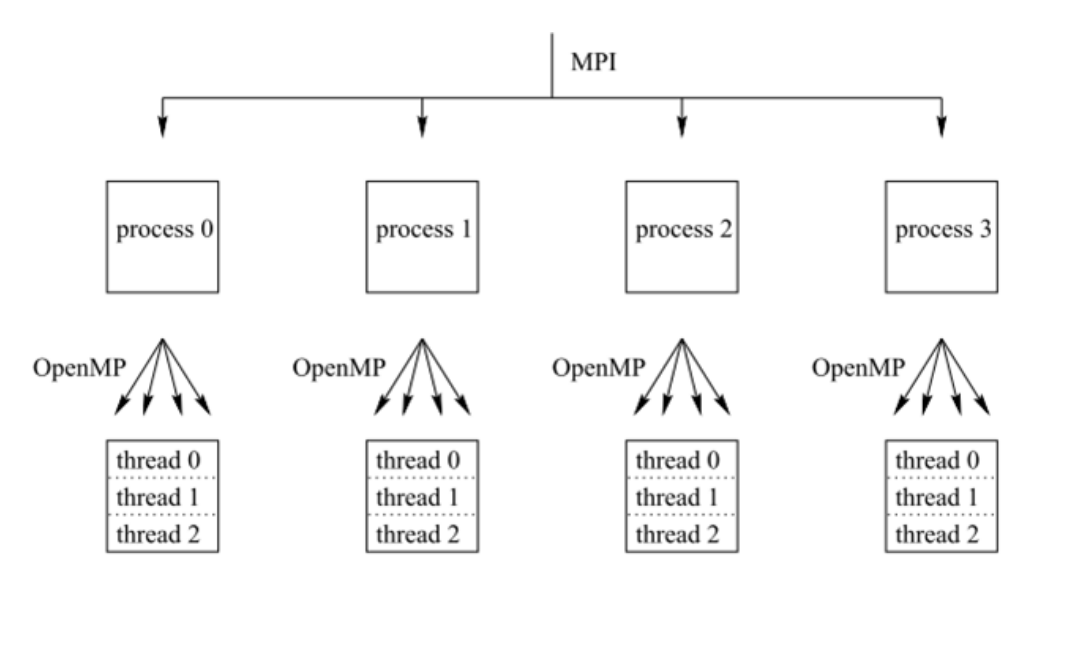

For those of you unfamiliar with MPI, it is a message-passing standard for programming parallel computers at the process level (typically across nodes), generally with a distributed memory model. OpenMP, on the other hand, is used for parallel programming at the thread level. Even though MPI was designed for message passing in distributed systems, you can still experiment on your own multi-core machine if you don’t have a cluster available; you just may not see a performance increase. OpenMP, however, was designed to exploit thread-level parallelism on shared memory machines, so you can incorporate OpenMP into your locally run programs and potentially gain some performance right away.

Here’s a simple visual representation:

Let’s start by writing a basic MPI Hello World program. Check out my GitHub for code examples.

hello_mpi.c

    #include <stdio.h>
    #include "mpi.h"

    int main(int argc, char *argv[]) {
        int numprocs, rank;

        MPI_Init(&argc, &argv);                    /* start the MPI runtime */
        MPI_Comm_size(MPI_COMM_WORLD, &numprocs);  /* total number of processes */
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);      /* this process's rank */

        printf("Hello from process %d of %d\n", rank, numprocs);

        MPI_Finalize();                            /* shut the runtime down */
        return 0;
    }

Compile it with:

$ mpicc -Wall hello_mpi.c -o hello_mpi

Run it with 8 processes (change to suit your own architecture):

$ mpirun -np 8 ./hello_mpi

Output:

    Hello from process 6 of 8
    Hello from process 0 of 8
    Hello from process 2 of 8
    Hello from process 1 of 8
    Hello from process 5 of 8
    Hello from process 3 of 8
    Hello from process 7 of 8
    Hello from process 4 of 8

Okay, now that we have MPI running, let’s incorporate some OpenMP.

hybrid_hello.c

    #include <stdio.h>
    #include "mpi.h"
    #include <omp.h>

    int main(int argc, char *argv[]) {
        int numprocs;
        int rank;
        int tid = 0;
        int np = 1;

        MPI_Init(&argc, &argv);
        MPI_Comm_size(MPI_COMM_WORLD, &numprocs);
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);

        /* Every thread in the team runs this block with its own tid and np. */
        #pragma omp parallel default(shared) private(tid, np)
        {
            np = omp_get_num_threads();   /* threads in this process's team */
            tid = omp_get_thread_num();   /* this thread's ID within the team */
            printf("Hello from thread %2d out of %d from process %d\n",
                   tid, np, rank);
        }

        MPI_Finalize();
        return 0;
    }

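The main additions to this code are the omp.h header, which declares the OpenMP runtime functions omp_get_num_threads() and omp_get_thread_num(), and the #pragma omp parallel directive, which runs the block that follows it on a team of threads. We also need to add the -fopenmp flag during compilation (this is the GCC and Clang spelling; other compilers use a different flag) so that the pragma is honored and the OpenMP runtime is linked in.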

$ mpicc -Wall -fopenmp hybrid_hello.c -o hybrid_hello
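One refinement worth knowing about: hybrid_hello.c starts MPI with plain `MPI_Init`, which says nothing about threads. The MPI standard also provides `MPI_Init_thread`, which lets a program state the level of thread support it needs and check what the library actually grants. The sketch below assumes that only the main thread makes MPI calls (as in hybrid_hello.c, where no MPI calls appear inside the parallel region), so it requests `MPI_THREAD_FUNNELED`. Aborting when the level is too low is just one possible policy, chosen here for brevity.

```c
#include <stdio.h>
#include <mpi.h>

int main(int argc, char *argv[]) {
    int provided;

    /* Request FUNNELED support: the process may be multithreaded, but
       only the thread that initialized MPI will make MPI calls. */
    MPI_Init_thread(&argc, &argv, MPI_THREAD_FUNNELED, &provided);

    /* The thread-level constants are ordered, so a simple comparison
       tells us whether the library granted at least what we asked for. */
    if (provided < MPI_THREAD_FUNNELED) {
        fprintf(stderr, "MPI thread support too low (level %d)\n", provided);
        MPI_Abort(MPI_COMM_WORLD, 1);
    }

    /* OpenMP parallel regions would go here, with all MPI calls kept
       outside them or restricted to the main thread. */

    MPI_Finalize();
    return 0;
}
```

It compiles the same way as the other examples (`mpicc -Wall -fopenmp`). If threads other than the main one need to call MPI, a higher level such as `MPI_THREAD_SERIALIZED` or `MPI_THREAD_MULTIPLE` would have to be requested instead.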

Let’s see what a run on my local machine with 16 hardware threads looks like. Each process typically starts one OpenMP thread per hardware thread by default, so we’ll run with only 2 processes so we don’t have 200 threads saying hello.

$ mpirun -np 2 ./hybrid_hello

    Hello from thread 15 out of 16 from process 1
    Hello from thread  3 out of 16 from process 1
    Hello from thread  6 out of 16 from process 1
    Hello from thread 15 out of 16 from process 0
    Hello from thread  9 out of 16 from process 0
    Hello from thread  3 out of 16 from process 0
    Hello from thread  8 out of 16 from process 1
    Hello from thread  6 out of 16 from process 0
    Hello from thread  0 out of 16 from process 0
    Hello from thread 10 out of 16 from process 0
    Hello from thread  5 out of 16 from process 0
    Hello from thread  4 out of 16 from process 0
    Hello from thread  4 out of 16 from process 1
    Hello from thread  1 out of 16 from process 0
    Hello from thread  7 out of 16 from process 1
    Hello from thread 11 out of 16 from process 0
    Hello from thread  7 out of 16 from process 0
    Hello from thread 13 out of 16 from process 0
    Hello from thread 14 out of 16 from process 1
    Hello from thread 14 out of 16 from process 0
    Hello from thread  8 out of 16 from process 0
    Hello from thread 10 out of 16 from process 1
    Hello from thread  2 out of 16 from process 0
    Hello from thread  5 out of 16 from process 1
    Hello from thread 13 out of 16 from process 1
    Hello from thread  2 out of 16 from process 1
    Hello from thread 11 out of 16 from process 1
    Hello from thread  1 out of 16 from process 1
    Hello from thread  9 out of 16 from process 1
    Hello from thread 12 out of 16 from process 0
    Hello from thread 12 out of 16 from process 1
    Hello from thread  0 out of 16 from process 1

Not too exciting, but it shows that we have all the pieces working and can use these tools to solve some more interesting problems.

Stay tuned for more posts about hybrid programming with MPI and OpenMP!

Thanks for Reading! --- @avcourt
